“Coding that once took minutes can be reviewed in seconds, which adds up quickly across a full clinic day.”

As Commure’s Clinical Strategy Lead, Dr. Aabha Morey brings together her experience as a primary care physician in India and her work across healthcare technology to help organizations adopt AI thoughtfully and effectively.

In this edition of Commure Uncharted, she breaks down why coding remains such a burden for clinicians, how generative AI has changed the landscape, and what health systems should consider when evaluating autonomous coding tools and their ROI.

Q: Why is coding still such a persistent pain point for providers and health systems?

Clinicians are trained to care for patients, not to code. Their day is already filled with diagnosing, coordinating care, and documenting visits. Coding becomes yet another task layered on top of an overloaded workflow, and if it is missed or captured incorrectly, the visit cannot be reimbursed correctly. Because coding has never been core to medical training, it often falls through the cracks. That gap makes the process burdensome for clinicians and well suited to generative AI tools that can take on the administrative lift.

Q: Why is the industry finally ready for fully or near-fully autonomous coding after years of attempts?

The shift is largely driven by advances in generative AI. Models are improving every few months in how well they understand clinical text and even clinical images. At the same time, health systems are under increasing financial pressure from reimbursement cuts, so they are looking for tools that help them capture the revenue they have already earned. Human coding is imperfect and often leaves money on the table. With the technology now strong enough to interpret documentation with far greater depth and consistency, interest in autonomous coding has grown significantly.

There has also been a cultural shift. As generative AI has become more accessible, people can see its value firsthand in their daily lives, which makes them more open to using it in clinical settings. I also see health systems approaching adoption responsibly by focusing on accuracy and data standards. Overall, the industry is more welcoming of AI than ever before, especially in areas like coding that have been long-standing pain points.

Q: What kind of time savings can clinicians realistically expect, and how does that time translate back into patient care?

In a recent study with a customer using our autonomous coding solution, clinicians saved up to 83 percent of the time they previously spent coding. That is time they can redirect to patients, their teams, or even themselves, and other organizations may see even larger savings depending on their setting. What used to take three minutes to code manually now takes under thirty seconds, because clinicians only need to review what the AI generated.
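The two figures in this answer line up. Taking the three-minute manual baseline and the thirty-second review time quoted above, the fraction of time saved per visit works out as:

$$
\frac{180\,\text{s} - 30\,\text{s}}{180\,\text{s}} = \frac{150}{180} \approx 0.83
$$

A review that finishes in under thirty seconds therefore corresponds to a saving of roughly 83 percent or more.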

Q: How do you balance physician oversight with automation to maintain trust in the system?

Clinicians still review and sign off on every AI-proposed code. AI can make mistakes, but most issues we see stem from conflicting information within the note itself. The key is to use guardrails that coders already rely on. For example, code edits flag combinations of codes that should not be billed together. If a note triggers a code edit or reflects a higher-complexity visit, it can be routed for additional review.
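To make the routing idea concrete, here is a minimal Python sketch. It assumes a note's proposed codes arrive as a list of strings. The code pairs, the complexity marker, and the names `ProposedCoding`, `triggered_edits`, and `route` are hypothetical placeholders, not real billing codes, a real edit table, or Commure's implementation. Every note still goes to clinician sign-off, and the sketch only decides whether it also needs additional review.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical placeholder pairs standing in for a real code-edit table.
# These are NOT actual billing codes or payer edits.
CONFLICTING_PAIRS = {frozenset({"CODE_A", "CODE_B"})}

# Hypothetical marker for a higher-complexity visit.
HIGH_COMPLEXITY_CODES = {"EM_LEVEL_5"}


@dataclass
class ProposedCoding:
    note_id: str
    codes: list[str]  # AI-proposed codes for one note


def triggered_edits(codes: list[str]) -> list[frozenset]:
    """Return every conflicting pair whose codes all appear in the proposal."""
    present = set(codes)
    return [pair for pair in CONFLICTING_PAIRS if pair <= present]


def route(coding: ProposedCoding) -> str:
    """Decide the review path; every path still ends in clinician sign-off."""
    if triggered_edits(coding.codes):
        return "additional_review"  # a code edit fired
    if HIGH_COMPLEXITY_CODES & set(coding.codes):
        return "additional_review"  # higher-complexity visit
    return "clinician_signoff"  # routine review only
```

For example, `route(ProposedCoding("n1", ["CODE_A", "CODE_B"]))` returns `"additional_review"`, while a note whose only code is `"CODE_C"` goes straight to routine sign-off. A production version would load its edit table from whatever source the health system already uses rather than hard-coding it.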

Autonomous coding does not mean bypassing clinicians. Instead, it gives them a faster way to validate codes with clear rationale and citations from the note, which turns what used to be a lengthy search into a quick review.

Q: Do you think we will ever reach a point where clinicians no longer need to sign off on notes and the coding process becomes fully automated?

Yes, it is a matter of when, not if. Lower-complexity, highly predictable visit types already follow patterns that lend themselves well to full automation. Those cases could be coded without a human in the loop. Higher-complexity visits or notes with inconsistencies will still need clinician review. Over time, as models improve and guardrails like code edits mature, more of the routine coding work can move toward full autonomy.

Q: What key metrics should health systems track to assess the ROI of autonomous coding?

I usually recommend looking at three areas: revenue impact, workflow efficiency, and clinician quality of life.

For revenue, track changes in claim volume, denials, and time to submission. When we compare periods of autonomous coding with comparable earlier periods of manual coding, we see more claims going out and fewer denials. Many organizations also recover revenue simply because claims are submitted faster.

For workflow efficiency, measure how much time and how many clicks clinicians save. Coding that once took minutes can be reviewed in seconds, which adds up quickly across a full clinic day.

And for quality of life, reduced administrative burden often shows up in lower burnout and higher satisfaction. At a recent conference, clinicians actually applauded when they heard about autonomous coding, which speaks to how much frustration coding has caused over the years.

Q: What are the biggest adoption barriers you see, and how can organizations overcome them?

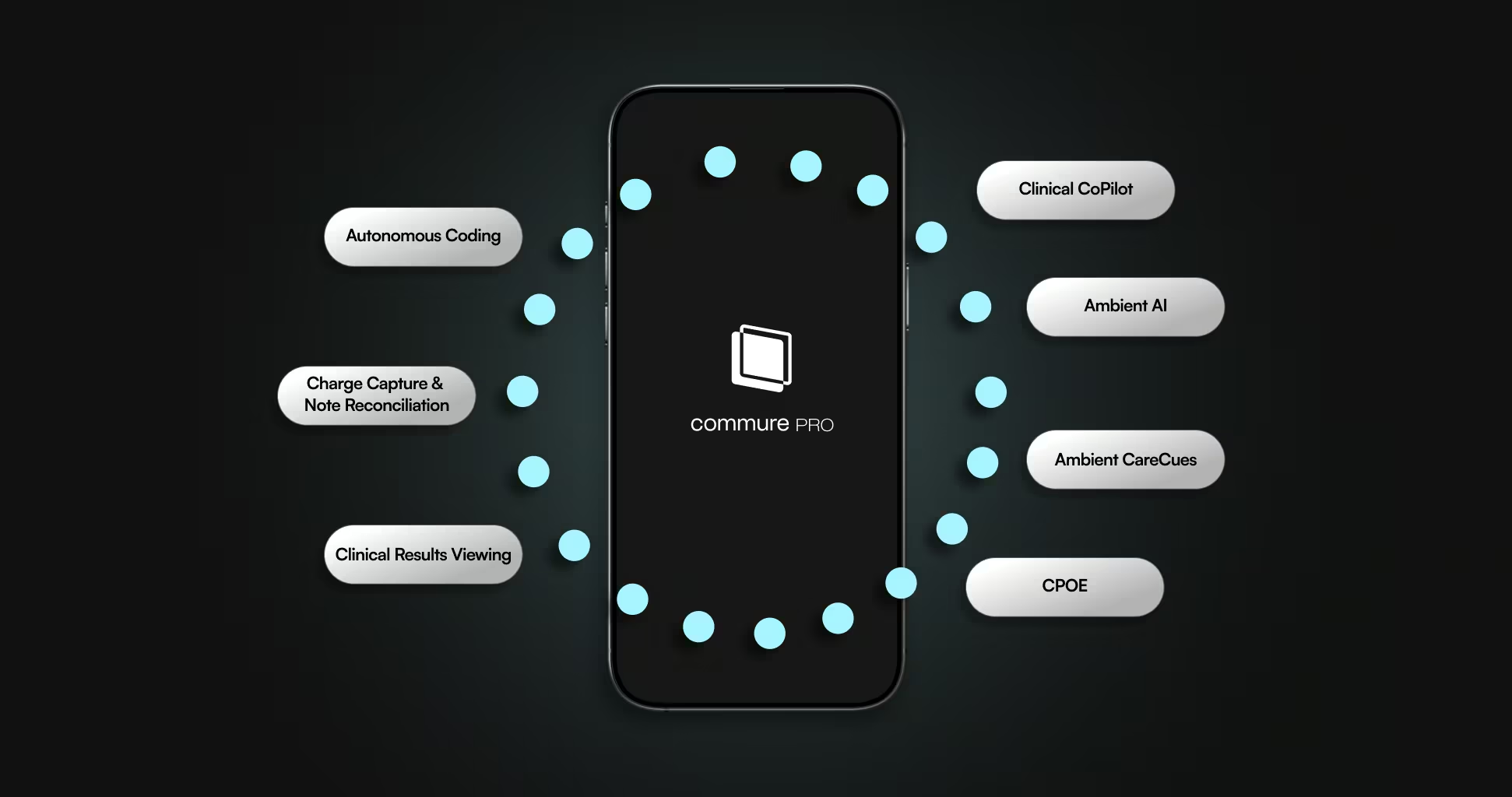

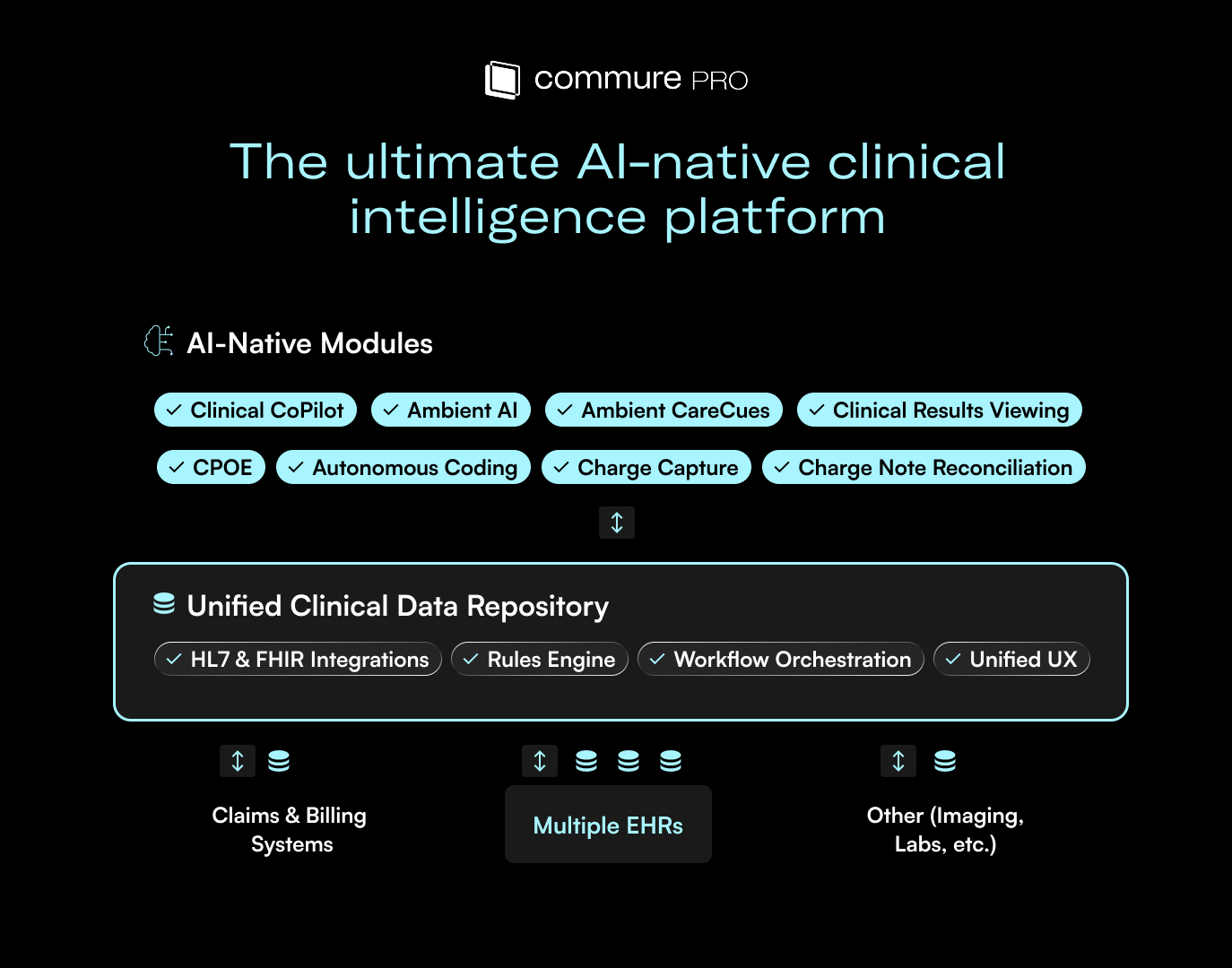

The first barrier is documentation quality. AI-generated codes will only be as accurate as the information in the note. Strong documentation standards and consistent templates go a long way, and using the same vendor for ambient AI documentation and autonomous coding helps because upstream data flows cleanly into the coding model.

The second barrier is data flow from the EHR. Sometimes notes, especially those written by advanced practice providers, are not sent to the coding system until an attending physician co-signs them, which creates missed opportunities for autonomous coding. In several implementations, the remaining gap in utilization came down to notes that never reached us from the EHR. Working with the EHR team to ensure the right data is transmitted reliably is essential.

Q: What advice would you give a health system evaluating autonomous coding tools?

Start by looking at how well the vendor performs for your specific specialty. Many companies say they can handle everything from OB to neurology to facility coding, but accuracy often breaks down in areas like unspecified codes or laterality. Make sure you understand their real performance for the cases you see every day.

It is also a positive sign if a vendor tells you they need to fine-tune the model to your data. Documentation patterns vary widely across health systems, so an out-of-the-box model will never fit perfectly. Tailoring it to your environment is essential for accuracy.

Finally, consider what else the system can support. Coding is downstream from many other steps in the clinical workflow, so working with a vendor that also provides ambient documentation or intake can give you a more connected and accurate result. A shared backend ensures that all of the visit complexity is captured and reflected in the final claim.

Q: What makes Commure’s autonomous coding tools stand out from other solutions on the market?

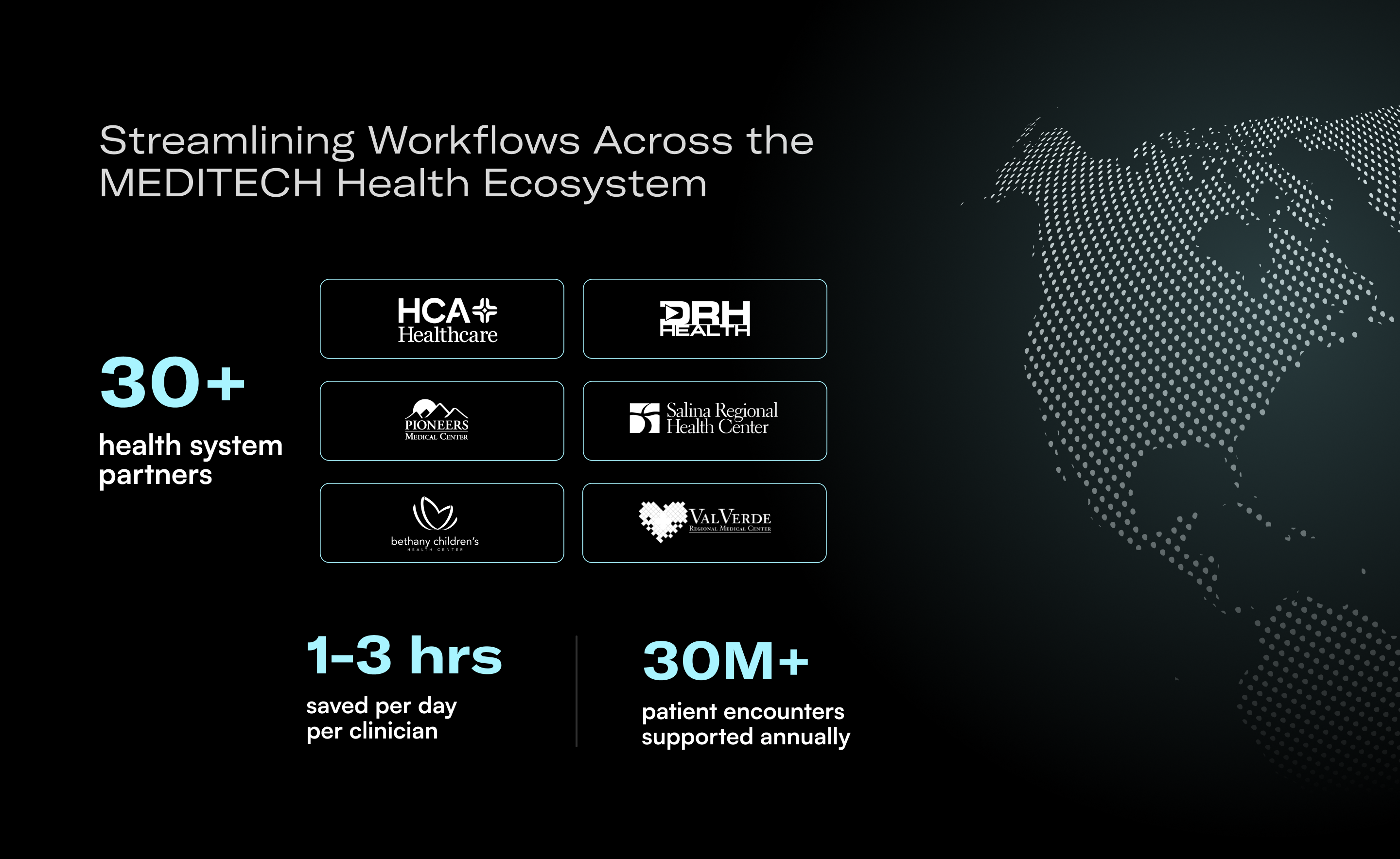

One major advantage is our forward-deployed engineering model. It is one thing to tune a model for a specialty and another to tune it for a specific health system with its own payer mix, documentation standards, and nuances. Our teams work directly with customers to tailor the model so it performs optimally and scales across departments and facilities.

Another differentiator is our shared architecture with Ambient AI. Because all of the patient conversations, pre-visit information, and historical context flow through the same system, the coding model has a far more complete clinical picture. That means it captures visit complexity, patient comorbidities, and other details that often go uncoded in manual processes.

Together, those capabilities lead to more accurate, context-rich coding that reflects the full care delivered.
