Welcome to Commure Uncharted, a Q&A series where we sit down with healthcare industry thought leaders to discuss what the future of AI in healthcare could look like.

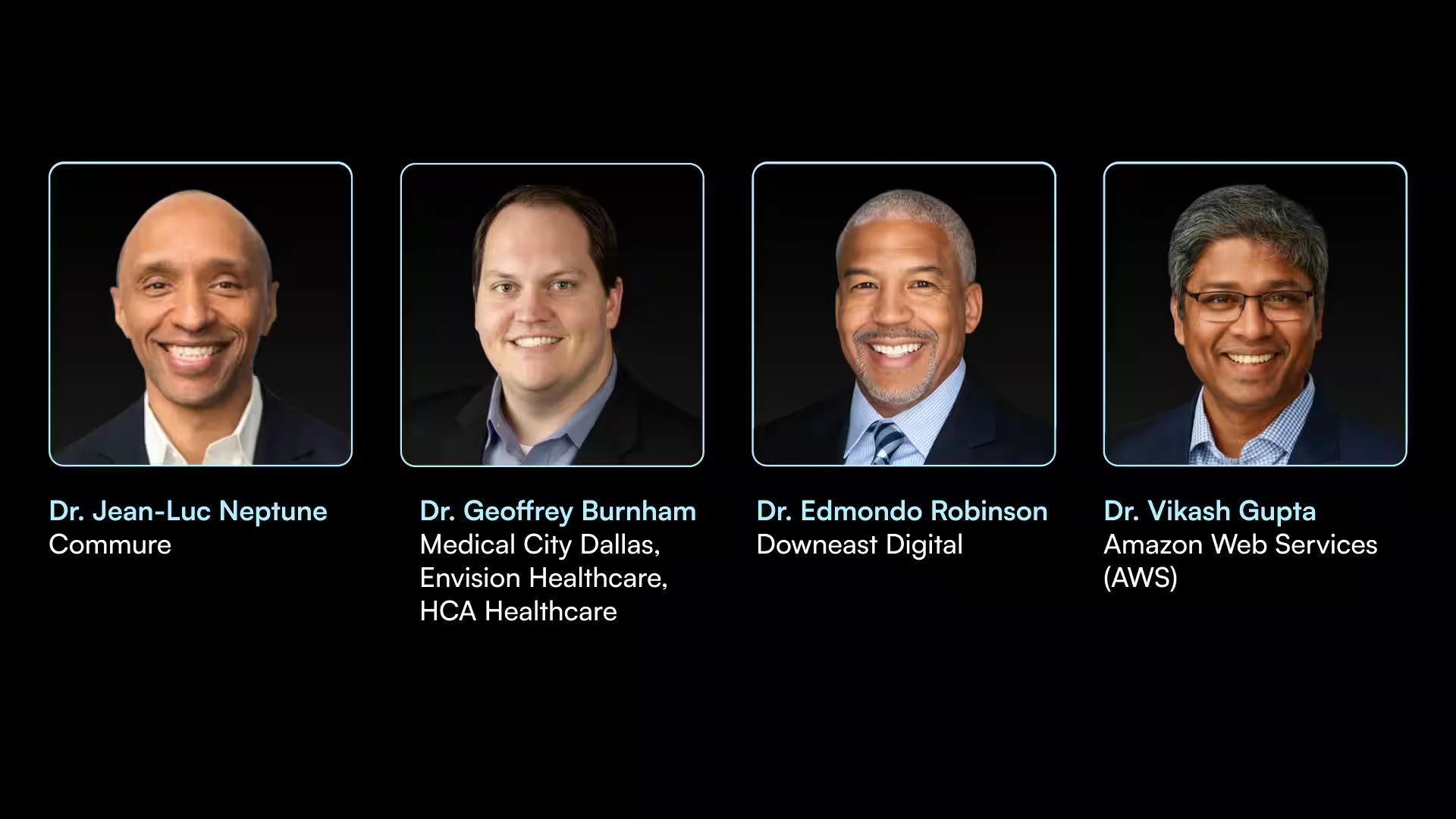

We are excited to be joined by Jean-Luc Neptune, MD, MBA. Dr. Neptune has seen plenty of tech waves hit healthcare, but he says this one feels different. As Executive Medical Director at Commure, he's deep in the conversations shaping the future of AI-powered care. In this Q&A, he separates what's real from the hype and explains how AI is reshaping trust, risk, and the role of the physician.

Q: What makes this wave of AI more transformative than past tech shifts in healthcare?

People forget how disruptive the mass adoption of the Internet was 20 years ago. It gave patients access to information that had been hard to find, helped them look up symptoms or treatments, and shifted how they interacted with providers, for better and worse. But it didn't really change how doctors practiced medicine.

What’s different now is that AI, especially the advances we’ve seen since the pandemic, is starting to shift how doctors actually think, diagnose, and recommend treatment.

We’re getting close to a point where patients could have healthcare encounters that are handled entirely by AI, without a human involved.

That kind of interaction would be a major shift from anything we’ve seen before.

If you had asked me five years ago whether a computer could handle an inbound patient question with 95% satisfaction, I would’ve said not a chance. But we’re on the verge of that now. We may not be ready to let AI run fully on its own, but the tech is at a point where it could handle a lot more than we’re currently allowing.

Q: Where do people most misunderstand AI’s role in clinical care?

The biggest thing people miss is how fast this technology is evolving. We tend to think about the rate of change in linear terms, but technology often evolves exponentially. There have been decades of work behind the scenes on AI, and now it's finally starting to show up in real clinical settings. What feels advanced today will likely look basic in five to ten years, or even sooner. Most people aren't prepared for how different things could look in a short period of time.

Q: As AI takes over more of the technical and administrative tasks, what will define a great doctor?

What makes a great doctor hasn’t really changed; it’s still about communication. Patients remember the doctors who listened and connected with them. But the profession has long prioritized memorization and clinical reasoning. That’s what gets rewarded in medical school and residency.

As AI takes over more of those tasks, the real value will shift to how well a doctor can communicate, build trust, and leverage information.

When you encounter a brand-new patient in the clinic and you don’t know anything about that person, it takes skill and presence to collect what you need to make a diagnosis and build a care plan.

Beyond communication, doctors will need stronger management skills. Today, a typical primary care physician manages 2,000 to 3,000 patients. With the right technology to scale our clinical efforts, that number could grow dramatically. Why not 10,000 or more? AI can help identify who needs attention and when, but doctors will have to think more like care managers, not just clinicians. That shift isn't emphasized in training today, but it's going to become essential.

Q: Will physicians shift from diagnosing to orchestrating care?

I think you'd have to be naive to believe there won't be a day when computers are better at diagnosis than people. We're in the middle of that transition now: providers and patients are starting to see the potential but are still adjusting to the idea.

At some point, maybe sooner than we expect, patients will trust the machine’s answer more than the doctor’s. Patients will ask their doctor, “What did the computer say?”

This mental shift happened long ago in areas like weather forecasting, where no one asks for a human opinion; they just check the model. Even as a physician, I’ve reached a point where I want to know what the AI thinks. When my wife had a mammogram recently, we opted for the AI readout (which cost an extra $25) because I believe it can add real value.

If AI handles more of the diagnostic work, then physicians will need to focus on what happens next. That means making sure patients get their medications and stay on their treatment plans, and understanding why they aren't following through when they don't. It also means shifting attention from what happens in the office to what happens outside it. Patients spend most of their time outside the clinic, and that's where their care often breaks down.

Q: With the rise of 24/7 AI-driven care, how do you see continuity of care evolving? Can technology really support long-term patient relationships?

As long as it’s clear that the technology is being used to support the care team, not replace it, patients are generally comfortable. When I worked at Memora (which was acquired by Commure in December 2024), that was always our message: this is a tool that helps your providers take better care of you. People were open to that and willing to engage.

As the technology improves, it becomes easier to imagine a different kind of patient experience. Instead of brief check-ins every couple of months, we could move toward continuous care, with your providers always present in the background.

In an ideal future, your doctor will be monitoring your status around the clock. Most of the time, nothing of clinical importance happens. But when something does (for example, your blood pressure spikes or your diabetes gets out of control), that’s the day your care team gets notified and steps in. That’s the kind of real-time responsiveness we’ve never had at scale, and that’s where this technology has real potential to raise the quality of care.

Q: Who’s accountable when AI gets it wrong: the doctor, health system, or developer?

That's the big question, and it's one I rarely see people address. That's probably because most people have never had to purchase malpractice insurance and think through the medicolegal questions that come with practicing. I purchased my own malpractice insurance for a number of years while running my mental health and substance use startup, and I can tell you that it really shapes your thinking and the risks you're willing to take as a provider.

Right now, if there’s a bad clinical outcome, the physician and the provider organization are almost certainly going to get sued. But what happens when that bad outcome occurs because of a bad recommendation from an AI system?

If the AI is a malfunctioning product, I know there's a product liability framework that can be used to address any harm done to a patient. But accountability for AI recommendations more broadly is something we're going to have to reckon with, especially as we move toward AI-only interactions.

In my opinion, this liability concern is already slowing adoption. Physicians don't think in aggregate; they think in individual outcomes. One mistake can ruin a career, so providers tend to be very cautious in how they embrace new tools.

In the tech world, a 98% success rate is considered excellent. In healthcare, however, 98% isn’t good enough if the remaining 2% leads to real harm.

Q: What do you see as the most important opportunities and risks that AI brings to healthcare right now?

It's a really exciting time, but big shifts like this can be unsettling. One of the many concerns we hear from customers is job loss. That's understandable; many people live with real financial insecurity, and the idea that new technology could make large parts of their workforce obsolete is hard to hear.

But AI also gives us a chance to finally address some of the biggest issues in healthcare. Thirty million people in the U.S. are uninsured. For many of them, even a solution that's 90% as good as seeing a doctor, but costs $10 a month, could be life-changing. Too often, we treat "not perfect" as unacceptable when, in reality, a lot of people have no access at all. We're already seeing people use tools like ChatGPT to self-diagnose, and the results are far more accurate than what people got when they first started using Google as an alternative to speaking with their physician. That's not ideal (I think patients should be working with providers to access health resources), but it's a signal that people are ready to engage if the tools are there.

Cost is the next challenge. We’ve been talking about rising healthcare costs since I was in business school over two decades ago, and they’ve only continued to rise. If we can use machines to deliver care more efficiently, we have a real shot at making the system more sustainable.

And then there’s quality. Doctors are human; we make mistakes, we fall behind on guidelines. AI can help raise the baseline by making sure we’re working with the best available information. There are still plenty of tough questions to answer, but the momentum is real. AI isn’t going away, and you can’t put the genie back in the bottle, so we have to figure out how to make it work.
